Facilitating SAFe Team Self-Assessments

As part of an Agile Release Train’s commitment to relentless improvement, all the teams on the train need to reflect on and assess the effectiveness of their Scrum and XP practices on a regular cadence. For most trains, once per PI (Program Increment) seems a logical frequency. The Scaled Agile Framework provides a self-assessment tool to support this process and makes it freely available for download at: https://www.scaledagileframework.com/metrics/

I have found that clients often want to run self-assessments as an online survey that team members complete individually. Personally, I am not keen on this approach, for a number of reasons. Firstly, most new agile teams don’t have a clear and consistent understanding of what good looks like, so they tend to overstate their level of maturity. (The first time we conducted a self-assessment with the EDW Release Train, the most mature team gave themselves the lowest score and the least mature teams gave themselves the highest scores!)

Secondly, when members complete online surveys individually, the team doesn’t have an opportunity to discuss their different perspectives and reach a shared understanding. In my experience, self-assessments provide an excellent coaching opportunity, especially if you are the only coach supporting an ART and doing so part-time. Often this can be as simple as reminding the team what a 5 looks like and resetting their anchors. Although an RTE can take a DIY approach, an external facilitator can be very valuable. As a coach, this is your opportunity to ensure the assessment is used only for good and not evil.

Last year, as I was getting ready to facilitate the first round of self-assessments for a new train, I started thinking about how I would facilitate these sessions. My priority was to ensure that every team member had an opportunity to express their individual point of view, which led me to consider how Planning Poker uses a simultaneous reveal to prevent anchoring. One idea I had was to create cards numbered with the 0 to 5 rating scale, but that felt a little boring. Then it came to me: the perfect combination of silent writing on Post-it notes and a big visible information radiator…

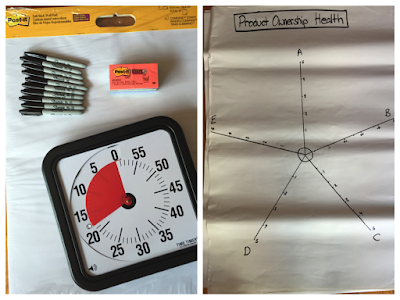

During my lunch break, I raced out to Officeworks and bought a box of Sharpies, an 8-pack of Super Sticky Post-its and a pad of butcher's paper. I made it back to the office and found the meeting room with moments to spare. I quickly drew a large star, like the axes of a radar chart, on five sheets of butcher's paper and gave each poster a heading matching one of the areas in the SAFe Self-Assessment: Product Ownership Health, PI/Release Health, Sprint Health, Team Health and Technical Health. I then labelled each axis A through E and marked the numbers 1 through 5 along each axis. On a sixth sheet I wrote up the rating scale. I attached the posters to the wall, put out a Post-it pad and a Sharpie for each team member, and waited for the team to arrive.

Once the team had settled in, I gave a brief introduction, reminding everyone that the purpose of the self-assessment is to reflect on where the team is at and identify opportunities for improvement. The self-assessments would not be used to compare teams, nor would management use them to “beat up” the teams. Then came the instructions: “We are going to work through each of the five sections using the following approach. Each section has five statements, which I have labelled A through E. As I read out each statement, write down its letter and your score using the rating scale provided. This is a silent writing exercise. Once everyone has scored all five statements for an area, you will each place your responses on the chart. Then we will go through and discuss the responses and reach a consensus on the overall score for the team.”

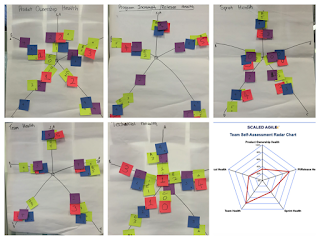

Section by section, the team created visualisations reflecting their assessment of the current state. On some aspects the team was close to 100% aligned; on others their opinions could not have been more varied. As each section was completed, I facilitated a discussion with the group about the results. Where there were clear outliers, I would start by asking for someone to comment on them. For the most part, the discussions resulted in convergence on a shared assessment, which I could record in the template. When the team struggled to reach consensus, I asked them to “re-vote” by holding up the number of fingers that reflected their current view, and I recorded the mode. Once we had completed all five assessment rounds, I also had the data to complete the summary radar chart.
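If you prefer to tally the Post-it scores or the finger re-vote digitally, the arithmetic is small. The Python sketch below is a hypothetical helper (`summarise_votes` is an illustrative name, not part of the SAFe self-assessment tool) that summarises one statement's 0 to 5 ratings: the mode (reporting every tied score rather than silently picking one), the median, the spread, and any outliers. The outlier rule, a score two or more points from the median, is an assumption chosen for illustration, not something the SAFe tool defines.

```python
from statistics import median, multimode


def summarise_votes(votes: list[int]) -> dict:
    """Summarise the 0-5 ratings a team gave one statement.

    Hypothetical helper: the outlier threshold below is an assumption,
    not part of the SAFe self-assessment.
    """
    if not votes:
        raise ValueError("need at least one vote")
    if any(v < 0 or v > 5 for v in votes):
        raise ValueError("ratings must be on the 0-5 scale")

    mid = median(votes)
    return {
        # multimode returns every most-common score; more than one entry
        # means the re-vote tied and needs more discussion.
        "modes": sorted(multimode(votes)),
        "median": mid,
        # Assumed rule: a score 2+ points from the median is an outlier
        # worth asking about first.
        "outliers": sorted(v for v in votes if abs(v - mid) >= 2),
        "spread": max(votes) - min(votes),
    }


# One statement's Post-it ratings from a six-person team (made-up data).
print(summarise_votes([3, 3, 4, 1, 3, 5]))
# {'modes': [3], 'median': 3.0, 'outliers': [1, 5], 'spread': 4}
```

A tied re-vote such as `[2, 2, 4, 4]` returns `[2, 4]` as the modes, which is arguably a cue for more discussion rather than an arbitrary pick.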

The first few times I used this approach, I struggled with the time box and did not leave enough time for the most important step: identifying the actions the team would take to improve. These days I use a two-hour time box and always make sure the team leaves the session having committed to acting on their key learnings. I try to get one improvement focus or action from each of the five areas.

I think one of the strengths of this approach is its alignment with brain science about how adults learn. In her book Using Brain Science To Make Training Stick, Sharon Bowman, creator of ‘Training From the Back of the Room’, talks about six learning principles that trump traditional teaching:

- Movement trumps Sitting: In order to learn, the brain needs oxygen. The best way to get oxygen to the brain is to move.

- Talking trumps Listening: The person doing the most talking is doing the most learning.

- Images trump Words: The more visual the input, the more likely it is to be remembered.

- Writing trumps Reading: The team will remember anything they write longer than anything you write.

- Shorter trumps Longer: People will generally check out within 20 minutes.

- Different trumps Same: The brain quickly ignores anything that is repetitive, routine or boring.

This approach to facilitating self-assessments includes Movement, in the form of standing up and placing answers on the chart; Talking, in the form of the team’s discussion of the results amongst themselves; Images, in the form of the posters; Writing, in the form of the written responses; Shorter, in the form of the 20-minute time box for each area; and Different, because it is not filling out an online survey!

As the SAFe Self-Assessment is very practice-centric, teams will probably outgrow it as they move through Shu-Ha-Ri. You will probably also find that after the first assessment, results often go down rather than up, as teams begin to understand what good looks like and hold themselves to a higher standard. As a rule, I am not a fan of “agile maturity” surveys: they tend to end up on performance dashboards and scorecards, pressuring teams to improve their scores and most likely leading to the system being gamed. The real value is in the conversations: reaching a shared understanding of each team member’s experience and a consensus on the next actions the team should take as part of their commitment to relentless improvement.

If you would like to try this approach yourself, you can access the facilitation guide here.